A little while back I rebuilt my RAID array (going from RAID10 to RAID6 because I needed more capacity) and couldn't find any information online about the actual performance hit an array takes while a background initialization is running.

The Dell software says there is a minor performance impact during the operation and that you can still use your system as usual. I was curious, though: how much of an impact is there? I ran some CrystalDiskMark benchmarks during and after the process for fun.

The disks I am using are 10TB Seagate IronWolf drives (7200RPM, CMR).

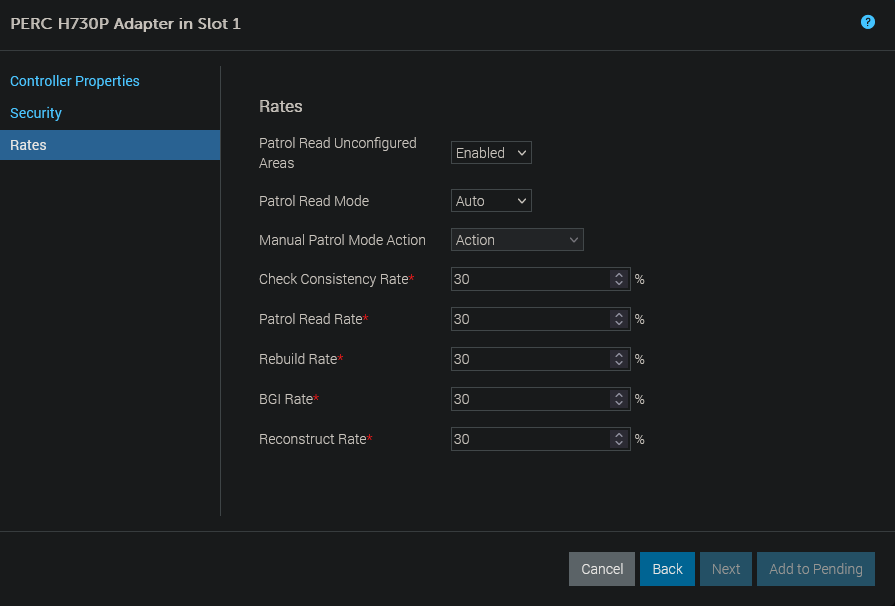

Here are my PERC controller's settings:

Here are the CrystalDiskMark results during and after the background initialization, with the following test settings:

Test Count: 5

Test Size: 1GiB (the PERC has a 2GB cache, so it was likely in play during these runs)

| Test | Read during (MB/s) | Read after (MB/s) | Read % improvement | Write during (MB/s) | Write after (MB/s) | Write % improvement | Read during (IOPS) | Read after (IOPS) | Read % improvement | Write during (IOPS) | Write after (IOPS) | Write % improvement |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SEQ1M Q8T1 | 1082.30 | 1379.37 | 27.45 | 938.86 | 1067.59 | 13.71 | 1032.16 | 1315.47 | 27.45 | 895.37 | 1018.13 | 13.71 |
| SEQ1M Q1T1 | 669.67 | 1157.87 | 72.90 | 906.00 | 947.21 | 4.55 | 638.65 | 1104.23 | 72.90 | 864.03 | 903.33 | 4.55 |
| RND4K Q32T1 | 69.65 | 64.82 | -6.93 | 65.98 | 53.76 | -18.52 | 17005.37 | 15825.93 | -6.94 | 16108.15 | 13124.27 | -18.52 |
| RND4K Q1T1 | 6.84 | 30.04 | 339.18 | 28.76 | 44.43 | 54.49 | 1668.95 | 7333.98 | 339.44 | 7021.73 | 10846.44 | 54.47 |
| Average | | | 108.15 | | | 13.56 | | | 108.21 | | | 13.55 |
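For anyone wondering how the IOPS columns relate to the MB/s columns, here is a small sketch. It assumes CrystalDiskMark's MB/s means 10^6 bytes per second and that the block sizes are binary (1 MiB and 4 KiB); the function name is my own, not anything from the tool. The sequential rows match that to two decimals, while the 4K rows only agree approximately because the MB/s figures are rounded.

```python
MIB = 1024 * 1024  # assumed block size for the SEQ1M tests, in bytes
KIB4 = 4 * 1024    # assumed block size for the RND4K tests, in bytes


def iops_from_throughput(mb_per_s: float, block_bytes: int) -> float:
    """Convert throughput in decimal MB/s to I/O operations per second.

    Assumption: 1 MB = 1,000,000 bytes, as CrystalDiskMark appears to report.
    """
    return mb_per_s * 1_000_000 / block_bytes


# SEQ1M Q8T1 read during the initialization (1GiB test): 1082.30 MB/s
print(round(iops_from_throughput(1082.30, MIB), 2))

# RND4K Q32T1 read during the initialization (1GiB test): 69.65 MB/s
print(round(iops_from_throughput(69.65, KIB4), 2))
```

Because IOPS is just throughput divided by a fixed block size, the percentage columns for MB/s and IOPS should come out nearly identical, which is what the table shows.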

Test Count: 5

Test Size: 8GiB (larger than the controller cache, so these numbers should better reflect actual disk performance)

| Test | Read during (MB/s) | Read after (MB/s) | Read % improvement | Write during (MB/s) | Write after (MB/s) | Write % improvement | Read during (IOPS) | Read after (IOPS) | Read % improvement | Write during (IOPS) | Write after (IOPS) | Write % improvement |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SEQ1M Q8T1 | 857.75 | 988.56 | 15.25 | 876.61 | 908.28 | 3.61 | 818.02 | 942.79 | 15.25 | 836.03 | 866.20 | 3.61 |
| SEQ1M Q1T1 | 522.62 | 940.35 | 79.93 | 846.92 | 911.23 | 7.59 | 527.02 | 896.79 | 70.16 | 807.69 | 869.02 | 7.59 |
| RND4K Q32T1 | 10.94 | 13.38 | 22.30 | 19.87 | 20.04 | 0.86 | 2670.90 | 3267.33 | 22.33 | 4851.07 | 4893.31 | 0.87 |
| RND4K Q1T1 | 1.13 | 1.43 | 26.55 | 12.86 | 14.80 | 15.09 | 274.90 | 350.10 | 27.36 | 3139.65 | 3613.53 | 15.09 |
| Average | | | 36.01 | | | 6.79 | | | 33.78 | | | 6.79 |

Bottom line: it looks like there is roughly a 30% performance hit while a background initialization is running, which is pretty close to the rate configured on the PERC controller.
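One thing worth keeping in mind when reading the tables: "% improvement" is measured relative to the slower "during" number, while a "performance hit" is more naturally measured relative to the faster "after" number, so the two are not interchangeable. Here is a minimal sketch of both calculations using the 8GiB read results (MB/s) from above; the function names are just mine for illustration.

```python
def pct_improvement(during: float, after: float) -> float:
    """Gain after the initialization finished, relative to the 'during' value."""
    return (after - during) / during * 100


def pct_hit(during: float, after: float) -> float:
    """Loss while the initialization was running, relative to the 'after' value."""
    return (after - during) / after * 100


# (during, after) read throughput in MB/s from the 8GiB test
reads_8gib = {
    "SEQ1M Q8T1": (857.75, 988.56),
    "SEQ1M Q1T1": (522.62, 940.35),
    "RND4K Q32T1": (10.94, 13.38),
    "RND4K Q1T1": (1.13, 1.43),
}

for name, (during, after) in reads_8gib.items():
    print(
        f"{name}: improvement {pct_improvement(during, after):.2f}%, "
        f"hit {pct_hit(during, after):.2f}%"
    )

# Simple unweighted mean of the per-test hits
hits = [pct_hit(d, a) for d, a in reads_8gib.values()]
print(f"average read hit: {sum(hits) / len(hits):.2f}%")
```

The averages here are plain unweighted means across four very different workloads, so treat them as a rough indicator rather than a precise figure.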

Hey mate,

I was looking for someone to run tests and check how much background processes affect performance. However, I only started looking into this after I crashed a mission-critical server.

The server had 8x 1.92TB SSDs in RAID10. I added 2 more drives and started the expansion at 30%. The interesting part was that I had FastPath enabled on the initial 8 drives, and when the reconstruction started, FastPath apparently turned off. The resulting performance degradation was so significant that it crashed our website.